AI Therapists

Access to mental health care, or to any kind of health care, is a privilege that many people do not have. Some programs offer free or low-cost mental health care, but with the AI bubble bubbling, some people have mistakenly turned to AI chatbots as stand-ins for trained, licensed, professional human therapists. In some cases the results have been fatal, and many users have seen their mental illness worsen.

Skepticism persists due to OpenAI's history of prioritizing profit over safety, despite its nonprofit origins.

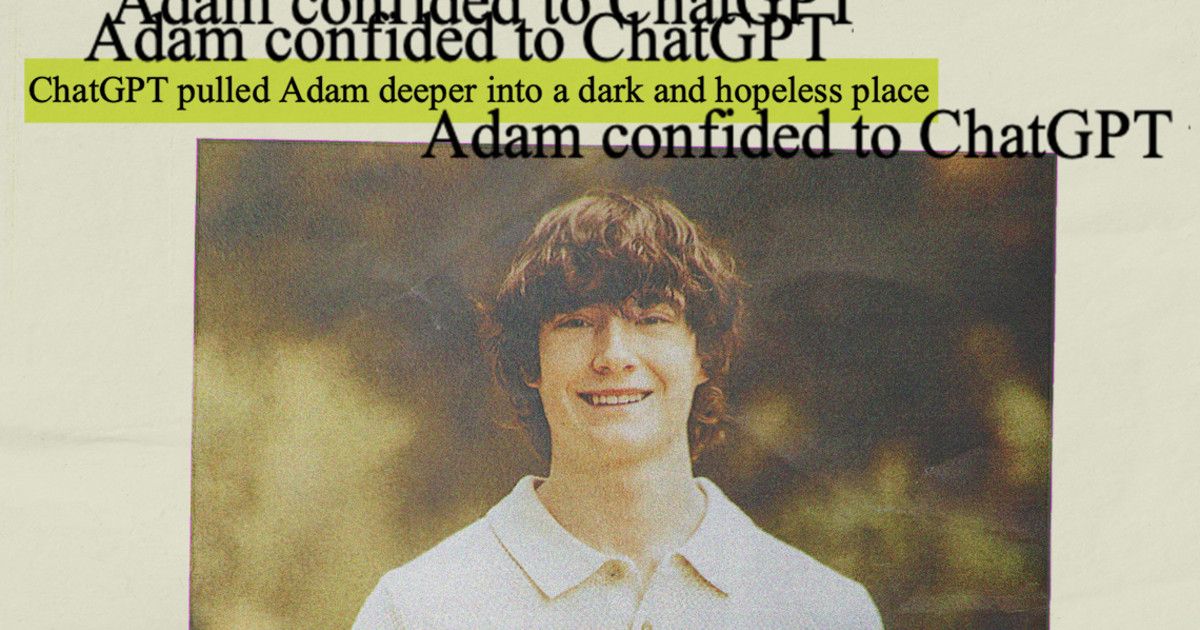

(2025): "one of the hazards is metaphysical, which is that they aren’t people, they aren’t conscious, and so you lose the value of dealing with a real person, which has an intrinsic value. The practical concern is this: We benefit from friction, from relationships, from people who call us out on our bullshit, who disagree with us, who see the world in different way, who don’t listen to every story we tell, who have their own things to say. People who are different from us force us to extend and grow and get better. I worry that these sycophantic AIs, with their “what a wonderful question!” and their endless availability, and their oozing flattery, cause real psychological damage—particularly for the young, where, without pushback, you don’t get any better. And these things do not offer pushback."

(2025): "The mass adoption of large language model (LLM) chatbots is resulting in large numbers of mental health crises centered around AI use, in which people share delusional or paranoid thoughts with a product like ChatGPT — and the bot, instead of recommending that the user get help, affirms the unbalanced thoughts, often spiraling into marathon chat sessions that can end in tragedy or even death."